# Load Project Data from MS Project Server

Node.js for the win

For the Bible Project (see earlier posts), switching to a public-domain version wasn't that difficult on the JavaScript side of things. Server-side, taking the data and writing it to a file was very easy with Node.js, Express.js, and Node's `fs` (file system) module (specifically `writeFileSync()`).

Client-side, the JavaScript was rather simple. Since I had DOM-parsed a number of kinds of Bible-related web pages over the past few weeks, redoing my work came naturally, along with some things I wish I had done earlier, such as checking whether the verses I was pulling proceeded 1-by-1 with no skips or misses. If the DOM parser found one or more missing verses, it would use prompt() to ask whether I wanted to continue processing (and the answer was always no, since a gap usually meant I was missing HTML element CSS classes I needed to parse).
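The original check ran as client-side JavaScript and isn't shown in the post. As a rough Python sketch of the same idea, assuming the parser has already produced the verse numbers of one chapter in ascending order and that every chapter starts at verse 1 (the function name `find_missing_verses` is hypothetical):

```python
from __future__ import annotations


def find_missing_verses(verse_numbers: list[int]) -> list[int]:
    """Return the verse numbers skipped in a chapter that should count 1, 2, 3, ...

    Assumes the parser yields verse numbers in ascending order and that every
    chapter starts at verse 1.
    """
    missing: list[int] = []
    expected = 1
    for verse in verse_numbers:
        # Any gap between the expected number and the one parsed is a miss.
        while expected < verse:
            missing.append(expected)
            expected += 1
        # max() keeps a duplicated verse number from moving the counter backwards.
        expected = max(expected, verse + 1)
    return missing


# Verses 4 and 5 were not picked up, e.g. because of an unhandled CSS class.
print(find_missing_verses([1, 2, 3, 6, 7]))  # [4, 5]
```

A non-empty result corresponds to the point where the author's script stopped and asked, via `prompt()`, whether to keep going.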

This time, I automated the client-side process fully, waiting just 1 ms between the data being pushed to the server successfully and changing window.location to the next chapter/page for parsing. Processing the entire Bible in one go would probably take about 5 to 10 minutes. Since I had to stop and change things for skipped verses, I don't know exactly how long it took, just that it handled about a page or two each second, and 1189 pages / 2 per second = 594.5 seconds, or about 10 minutes.

The start of the process, which occurred on page load, also prompted me to confirm that I wanted to start going page-to-page.

To add the client-side JavaScript to the HTML I wanted to scrub, I used PowerShell to append the contents of a file to the bottom of every page representing a Bible chapter (I found those pages by filtering directory listings by file name).
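The post doesn't include the PowerShell command; in PowerShell the idea is typically a `Get-ChildItem -Filter` listing piped into `Add-Content`. Below is a Python sketch of the same step, assuming the snippet file already contains its own `<script>` element. The glob pattern, the function name `append_snippet_to_pages`, and the file names in the usage line are all hypothetical stand-ins for the author's filter:

```python
from __future__ import annotations

from pathlib import Path


def append_snippet_to_pages(page_dir: Path, pattern: str, snippet_file: Path) -> list[Path]:
    """Append snippet_file's contents to the end of every page in page_dir matching pattern.

    The glob pattern stands in for the author's file-name filter; keep the
    snippet file outside page_dir so it is never appended to itself.
    Returns the pages that were modified, in sorted order.
    """
    snippet = snippet_file.read_text(encoding="utf-8")
    modified: list[Path] = []
    for page in sorted(page_dir.glob(pattern)):
        # Append mode leaves the existing HTML untouched and adds the snippet at the end.
        with page.open("a", encoding="utf-8") as handle:
            handle.write("\n" + snippet)
        modified.append(page)
    return modified
```

Usage, with made-up paths: `append_snippet_to_pages(Path("web"), "GEN_*.htm", Path("parser-snippet.html"))`. Browsers still run a `<script>` placed after `</html>`, which is why appending to the end of the file is enough.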

For the World English Bible, I also got all the Apocrypha / Deuterocanonical books, but I've skipped those for post-parsing, sticking to the 1189 chapters/pages I expect and want to handle first. Still, I do have the JSON for the extra content, which is over 200 pages/chapters.

My text-replacement list had over 500 entries for the NIV Bible, so I had to translate those into the W.E. Bible. That was a complex project in itself, so I'll detail it in the next post, but suffice to say that I love the flexibility and power of PowerShell for working with CSV and JSON files as objects. The syntactic sugar of that programming CLI made everything I wanted to do easy, and easy to prototype before going whole hog. For instance, Select-Object -First 2 came in *really* handy to make sure I would get what I wanted for a small subset at each step of translating text replacements from one version to another. The original goal of my project, which was simply to see what the Bible looked like with the word "Lord" replaced by "Earl", was met a while back. It just seems like a cool thing to be able to do for fun.
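The author did this work in PowerShell; the Python below is only an illustration of a replacement list applied to verse text. It assumes a CSV with hypothetical `find` and `replace` columns and whole-word, case-sensitive matching, neither of which the post specifies:

```python
from __future__ import annotations

import csv
import io
import re


def load_replacements(csv_text: str) -> list[tuple[str, str]]:
    """Read (find, replace) pairs from CSV text with hypothetical 'find' and 'replace' columns."""
    rows = csv.DictReader(io.StringIO(csv_text))
    return [(row["find"], row["replace"]) for row in rows]


def apply_replacements(text: str, pairs: list[tuple[str, str]]) -> str:
    """Apply whole-word, case-sensitive replacements in list order."""
    for find, replace in pairs:
        # \b word boundaries keep "Lord" from matching inside "Lordship".
        pattern = rf"\b{re.escape(find)}\b"
        # A function replacement stops re.sub from treating backslashes in `replace` specially.
        text = re.sub(pattern, lambda _match: replace, text)
    return text


pairs = load_replacements("find,replace\nLord,Earl\n")
# Like Select-Object -First 2: try a small subset before running the full list.
sample_pairs = pairs[:2]
print(apply_replacements("The Lord is my shepherd; Lordship stays.", sample_pairs))
# The Earl is my shepherd; Lordship stays.
```

Because replacements run in list order, an earlier entry can change text that a later entry would otherwise match, so the order of a 500-entry list matters.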

VodBot, and taking off the training wheels.

Part 2 of a series on my own little command line application, VodBot. This one will be much longer than the first! You can read part one here. The images in this part were done in MS Paint because I'm currently stuck in an airport!

So last we left off, VodBot was in its shelling-out stage. It was able to process data from Twitch's servers and on the local disk and figure out which videos were missing, but it left the biggest job, actually obtaining that footage, to more mature programs. In addition, VodBot didn't help all that much with slicing up videos in prep for archival on YouTube, or with actually uploading them to the archive channel on YouTube. These two things needed to change, for the sake of maintaining the project into the future, and also for me to keep my sanity.

Fun fact: Twitch uses the same API that's exposed to developers to build the entire website, and it's pretty well documented which OAuth Secret and ID they use, since you can easily find them in the HTML of any Twitch page. In case you don't know, an OAuth Secret and ID are essentially the password and username of a "user". No, this does not mean you can easily access anyone's info, channel, etc., because this ID and Secret have limited functions, used only for making the site work in a web browser. In fact, VodBot has its own ID and Secret, which are not published, because they're meant to stay secret unless you properly manage their permissions, which I have not (yet). Anyway, this little faux-login is used to access Twitch's database of video data and metadata. It goes through a system called GraphQL; you don't need the details for this, though. Whenever you pull up a video in your browser on Twitch's site, the ID and Secret are used to log in to this GraphQL API and pull the relevant data to display the video on your screen.
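For readers curious what "pulling data through GraphQL" looks like on the wire, here is a minimal Python sketch that only builds (does not send) a request. GraphQL over HTTP commonly posts a JSON body with `query` and `variables` keys; the endpoint, the `Client-ID` header name, and the query's field names are assumptions for illustration, not Twitch's documented schema or VodBot's code:

```python
from __future__ import annotations

import json
import urllib.request


def build_graphql_request(endpoint: str, client_id: str, query: str,
                          variables: dict[str, object]) -> urllib.request.Request:
    """Package a GraphQL query as an HTTP POST request without sending it.

    GraphQL over HTTP commonly uses a JSON body with "query" and "variables"
    keys. The "Client-ID" header name and the endpoint are assumptions here.
    """
    body = json.dumps({"query": query, "variables": variables}).encode("utf-8")
    headers = {"Content-Type": "application/json", "Client-ID": client_id}
    return urllib.request.Request(endpoint, data=body, headers=headers, method="POST")


# Hypothetical query: these field names are illustrative, not Twitch's schema.
VIDEO_QUERY = "query ($id: ID!) { video(id: $id) { title lengthSeconds } }"
request = build_graphql_request("https://example.com/graphql", "YOUR_CLIENT_ID",
                                VIDEO_QUERY, {"id": "123456789"})
print(request.get_method(), request.full_url)  # POST https://example.com/graphql
```

Passing the request to `urllib.request.urlopen` would actually send it; that step is left out so the sketch has no network side effects.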

Streams on Twitch, when watched after the stream is over, are sent in 15-second chunks. This is how many video platforms send video dynamically to your browser, allowing video to load while you watch! It's not always 15 seconds; it varies between platforms like Netflix, YouTube, Twitch, Amazon, etc. The database returns two important bits. First up is all the info on the video segments that Twitch has for a specific video. The other bit is just all the 15-second video files that Twitch sends to your browser. VodBot is now able to save all of these by itself without an extra program, but it still requires ffmpeg to stitch everything together, as these 15-second clips use a special protocol and it's not as easy as simply opening a file and writing the contents of each chunk one after another.
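The post doesn't name the segment format. Assuming the segment info arrives as an HLS-style `.m3u8` media playlist (a common format for chunked streaming), a minimal Python parser that lists each chunk and its duration could look like this. It downloads nothing, and the function name is hypothetical:

```python
from __future__ import annotations


def parse_media_playlist(playlist_text: str) -> list[tuple[float, str]]:
    """Return (duration_seconds, segment_uri) pairs from an HLS media playlist.

    Only #EXTINF tags are interpreted; other tags and blank lines are skipped.
    """
    segments: list[tuple[float, str]] = []
    pending_duration: float | None = None
    for raw_line in playlist_text.splitlines():
        line = raw_line.strip()
        if not line:
            continue
        if line.startswith("#EXTINF:"):
            # Tag format: #EXTINF:<duration>,[<title>]
            pending_duration = float(line[len("#EXTINF:"):].split(",", 1)[0])
        elif not line.startswith("#") and pending_duration is not None:
            # The first non-tag line after #EXTINF is that segment's URI.
            segments.append((pending_duration, line))
            pending_duration = None
    return segments


sample = """#EXTM3U
#EXT-X-TARGETDURATION:15
#EXTINF:15.000,
0.ts
#EXTINF:15.000,
1.ts
#EXTINF:7.250,
2.ts
#EXT-X-ENDLIST
"""
segments = parse_media_playlist(sample)
print(len(segments), sum(duration for duration, _ in segments))  # 3 37.25
```

The last chunk of a video is usually shorter than the rest, which is why the durations are read per segment rather than assumed to be 15 seconds.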

Once ffmpeg does its job, VodBot moves the video out to a proper archival location and removes the old metadata and all the 15-second video clips it pulled from Twitch's database. A major issue with this whole implementation is that Twitch can change the ID and Secret at any moment, meaning all the apps that rely on them can break. Although it's not currently implemented, it wouldn't be difficult to have VodBot's main configuration file contain the current values and allow them to be changed in case Twitch breaks something.
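The post describes this as a not-yet-implemented idea. A minimal Python sketch of it, assuming a hypothetical JSON config layout of `{"twitch": {"client_id": ..., "client_secret": ...}}` (not VodBot's actual format) and placeholder defaults that are deliberately not real Twitch values:

```python
from __future__ import annotations

import json
from pathlib import Path

# Placeholder defaults; these are NOT Twitch's real values.
DEFAULT_TWITCH_CREDENTIALS = {
    "client_id": "REPLACE_WITH_CLIENT_ID",
    "client_secret": "REPLACE_WITH_CLIENT_SECRET",
}


def load_twitch_credentials(config_path: Path) -> dict[str, str]:
    """Merge credentials from a JSON config file over built-in defaults.

    A missing file or missing keys fall back to the defaults, so users can
    update the values themselves if Twitch rotates them.
    """
    credentials = dict(DEFAULT_TWITCH_CREDENTIALS)
    if config_path.exists():
        data = json.loads(config_path.read_text(encoding="utf-8"))
        twitch_section = data.get("twitch", {})
        for key in credentials:
            if key in twitch_section:
                credentials[key] = str(twitch_section[key])
    return credentials
```

Only known keys are copied, so a typo in the config file can't silently introduce an unexpected setting.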

Next, since we already require VodBot to have ffmpeg, we can use the method I talked about last time to slice videos and prep them for upload. Problem is, we have a lot of functions we need to make accessible from a simple command line interface, so I had to begin thinking about how to organize VodBot's functions.
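The slicing method from the previous post isn't reproduced here. As an assumption, a typical ffmpeg cut between two timestamps can be built as an argument list in Python and later passed to `subprocess.run`; the function name and file names are hypothetical, and nothing is executed:

```python
from __future__ import annotations


def build_slice_command(source: str, start: str, end: str, output: str) -> list[str]:
    """Build ffmpeg arguments that copy the [start, end] portion of source into output.

    Timestamps are strings such as "01:02:03". With -ss and -to placed after
    -i, ffmpeg treats -to as an end timestamp rather than a duration.
    "-c copy" skips re-encoding: fast, but cuts may not land exactly on the
    requested timestamps because they depend on keyframe positions.
    """
    return [
        "ffmpeg",
        "-i", source,
        "-ss", start,
        "-to", end,
        "-c", "copy",
        output,
    ]


command = build_slice_command("vod.mp4", "00:10:00", "00:42:30", "highlight.mp4")
print(" ".join(command))
# ffmpeg -i vod.mp4 -ss 00:10:00 -to 00:42:30 -c copy highlight.mp4
```

Running it would be `subprocess.run(command, check=True)`; passing a list instead of a shell string avoids quoting problems with titles or paths that contain spaces.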

I kept it simple enough. Want to download videos? Run `vodbot pull` and VodBot will do all the hard work and download any videos you don't have. You can give it the keywords `vods` or `clips` and it'll pull what you need, and soon giving it a specific video ID will download that video too. Want to prepare videos to be sliced or uploaded? Run `vodbot stage add` with the appropriate identifier and VodBot will ask a series of questions about the video's title, description, and relevant timestamps to prepare the VOD or clip for upload to YouTube. Running `vodbot stage list` lists the videos currently queued for upload, and `vodbot stage rm` removes them from the stage. VodBot can output these videos with the appropriate information using `vodbot slice` with a stage ID and a file location, or with `all` and a folder location. Lastly, `vodbot upload all` uploads the entire stage queue to YouTube, provided you are logged in. You can also give a specific ID in place of `all` to upload a single video.

All of these commands have a purpose, or have sub-commands that do something related to each other. Pull and upload also have aliases named download and push respectively, in case you like having either style. Personally I like the git style, but download and upload are a bit more descriptive.
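VodBot's own argument handling isn't shown in the post. As an assumption, a similar git-style command tree, including the `download` and `push` aliases, could be declared with Python's standard `argparse` roughly like this (argument names such as `target` and `stage_id` are hypothetical):

```python
from __future__ import annotations

import argparse


def build_parser() -> argparse.ArgumentParser:
    """Declare a git-style command tree like the one described above."""
    parser = argparse.ArgumentParser(prog="vodbot")
    commands = parser.add_subparsers(dest="command", required=True)

    pull = commands.add_parser("pull", aliases=["download"], help="download missing videos")
    pull.add_argument("target", help="'vods', 'clips', or a video ID")
    # args.command holds whichever name was typed, so record a canonical name too.
    pull.set_defaults(canonical="pull")

    stage = commands.add_parser("stage", help="manage the upload queue")
    stage.set_defaults(canonical="stage")
    stage_commands = stage.add_subparsers(dest="stage_command", required=True)
    stage_commands.add_parser("add", help="queue a VOD or clip").add_argument("video_id")
    stage_commands.add_parser("list", help="show queued videos")
    stage_commands.add_parser("rm", help="remove a queued video").add_argument("stage_id")

    slicer = commands.add_parser("slice", help="cut staged videos with ffmpeg")
    slicer.set_defaults(canonical="slice")
    slicer.add_argument("stage_id", help="a stage ID, or 'all'")
    slicer.add_argument("output", help="a file path (one ID) or folder ('all')")

    upload = commands.add_parser("upload", aliases=["push"], help="upload staged videos to YouTube")
    upload.set_defaults(canonical="upload")
    upload.add_argument("stage_id", help="a stage ID, or 'all'")
    return parser


args = build_parser().parse_args(["download", "vods"])
print(args.command, args.canonical, args.target)  # download pull vods
```

Dispatching on `args.canonical` rather than `args.command` means `pull`/`download` and `upload`/`push` reach the same handler whichever spelling the user prefers.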

That's all for now; next time we'll actually get to how Google handles its exposed API and how messy it is.

For now though, if you'd like to support me, you can follow me on Twitter, Twitch, or buy me a ko-fi!

#twitch#twitch stream#twitch streamer#small streamer#streamer#stream#vodbot#youtube#code#programming#automation#apex barks

DigitalOcean Review 2021: Is it a good and secure hosting service? | TopReview

What is DigitalOcean?

DigitalOcean is an American cloud hosting company that launched its first server in 2011, focused on helping developers launch more apps faster and more easily. The ultimate goal of DigitalOcean is to use solid-state drives (SSDs) to create a user-friendly platform that allows its many clients to move projects to and from the cloud, ramping up production with speed and efficiency.

1. Fantastic “Average” Uptime of 99.99%:

DigitalOcean truly dominates in uptime, delivering an average of >99.99% over the most recent year of monitoring.

That means that since April 2020 they've had only 14 outages and 23 minutes of downtime. The only month in which DigitalOcean didn't deliver a perfect 100% uptime was April 2020 (with an uptime of 99.96%).

[Figure: DigitalOcean uptime and speed statistics for the last 12 months]

The average uptime for the past 12 months:

- March 2021: 100%
- February 2021: 100%
- January 2021: 100%
- December 2020: 100%
- November 2020: 100%
- October 2020: 100%
- September 2020: 100%
- August 2020: 100%
- July 2020: 100%
- June 2020: 100%
- May 2020: 100%
- April 2020: 99.96%
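As a quick arithmetic check, here is a short Python sketch of how the ">99.99%" yearly figure follows from the monthly numbers above. It takes a plain unweighted mean (ignoring that months differ in length), and the monthly figures are themselves rounded, so this is approximate:

```python
def average_uptime(percentages: list[float]) -> float:
    """Unweighted mean of monthly uptime percentages."""
    return sum(percentages) / len(percentages)


# Monthly figures from the list above: April 2020 at 99.96%, the other 11 months at 100%.
monthly_uptime = [99.96] + [100.0] * 11
print(f"{average_uptime(monthly_uptime):.4f}%")  # 99.9967%
```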

2. Lightning-Fast Load Times (268 ms)

Uptime is the most important metric to look for when choosing a web host.

After all, all the bells and whistles in the world won't count for much if your site spends long stretches offline.

Coming in as a close second is speed.

Slow sites might as well be ‘down’ for all practical purposes; sluggish sites are practically unusable. Your visitors won't hesitate to bounce. Literally. A difference of just a couple of seconds can cost you much of your potential site traffic.

Fortunately, slow speed isn't something you need to worry about when signing up with DigitalOcean.

[Figure: DigitalOcean page speed, Apr. 2020 to Mar. 2021]

Their average page load time over the past year was 268 ms, the fastest we've seen!

A close second is A2 Hosting with a 285 ms load time.

3. Developer-Friendly Product Ecosystem:

DigitalOcean isn't a one-trick pony. In fact, its suite of products offers plenty of potential for developers.

What are the various options offered by DigitalOcean?

Glad you asked.

Droplets

Droplets is a scalable computing platform that can be customized to meet all of a business's application needs. It also includes add-on storage, monitoring, and advanced security.

DigitalOcean Droplets:

You can choose between standard or optimized Droplets and then customize them as much as you like. Droplets let devs skip time-consuming installation and configuration and move straight on to deploying code.

Spaces:

Whereas Droplets is for application deployment, Spaces is all about simple object storage.

We're talking about a storage system that lets you store and deliver data to applications and end users. Spaces works through a simple process, providing reliable storage through an intuitive UI or an API.

Spaces can be used to store backup files, web logs, data for analytics, and much more.

The service is also scalable, so your Spaces can grow with your organization. What's more, Spaces can be combined with other DigitalOcean features or used on its own.

Kubernetes

Kubernetes is designed for developers and operators.

What can you do with Kubernetes?

You can deploy your web applications on Kubernetes for easier scaling, higher availability, and lower costs. You can also use it for APIs and backend services.

4. Flexible Pricing

Although we also list it under our cons, we think it's quite wonderful that you can customize everything you pay for: your site storage, CPU usage, bandwidth, database, memory, and so on.

It's a real benefit if you're an advanced user who already knows exactly what you need, what your goals are, what you don't need, and so on.

5. Daily Backups

DigitalOcean performs backups every day, and you can always restore any data from up to 7 days earlier. Even though DigitalOcean has superior uptime, it's always better to be safe than sorry!

6. Great Security

Your data and traffic are always secured. This is something many other hosts don't emphasize much, or don't provide at all. DigitalOcean makes sure your data is protected from end to end. It's a great benefit for keeping malicious connections and viruses out of your site's infrastructure.

By default, DigitalOcean has added encryption to its volumes. If you want to add an extra layer of security, as with most of its features, you'll need to go through a tutorial, follow the steps, and know some coding to succeed.

Cons of Using DigitalOcean Hosting

DigitalOcean started strong; however, there are also a few downsides worth noting.

Let's take a closer look.

1. For Advanced Users

The mark of a truly great product lies in its ability to summarize its services in layman's terms.

This is something tech companies, in particular, struggle to wrap their collective heads around.

After all, most tech sites and platforms tend to be full of jargon. As in, “special words or expressions that are used by a particular profession or group and are difficult for others to understand”.

When you look at tech products like DigitalOcean, the temptation to resort to jargon becomes clear. You're dealing with a lot of technical information, and an expert in the field is tempted to write it the way they understand it, not the way the average person can.

That's no big deal for advanced power users. They'll get it. It'll all make sense.

But for beginners? No way.

This is an area where DigitalOcean falls short significantly. The site's copy is full of technical terms and acronyms with no explanation. They're clearly marketing their product specifically to developers.

As a result, everyone else will struggle to figure out how to migrate, launch, maintain, or even grow their site.

By comparison, DreamHost does a great job of simplifying the language on its site into terms the average person can understand.

2. Lacks Basic Features Other Consumer Hosts Provide

Most web hosts we've reviewed throw in the same ‘extras.’ For example, backups, maybe a decent CDN, and even an SSL certificate.

Unlike others, because DigitalOcean caters to a more advanced crowd, it doesn't throw in many of the basic features that other hosts will provide or handle for you after you sign up.

Stuff like:

- Free domain name with hosting
- The ability to even buy a domain name
- Free site migrations

That's not to say they can't help you with some of these things. Still, you shouldn't expect a lot of hand-holding when you sign up.

This actually brings us to our next point.

3. Limited Customer Support

Most hosting companies offer some form of 24/7 support.

It may not always be excellent, but at least it's something.

Unfortunately, DigitalOcean has nothing like that. If your site goes down in the middle of the night (which could be disastrous if you're dealing with overseas markets), there's no one for you to talk to. You have to go to their site and open a support ticket using their online form.

[Figure: DigitalOcean support ticket form]

4. Complicated cPanel

As mentioned already, DigitalOcean is definitely not for beginners. Basically, cPanel is what you need to build your site these days (unless you're comfortable with programming languages).

For DigitalOcean, first of all, you'll need to set up a Droplet of your choice (DigitalOcean's servers). Then you'll have to install cPanel by following a detailed guide that involves entering a few commands (yes, you need to know some coding), registering your account, running the installer, and so on.

On top of all that, you'll need to buy a license from a third party to use cPanel.

If you have no experience with coding or with being a developer yourself, we recommend that you either hire a developer (a good one) or avoid DigitalOcean and find solutions that better suit your needs and skills.

cPanel itself is generally intuitive to use; then again, there's a learning curve, and it's definitely not for beginners.

5. Pricing Is Complicated

When you get into the pricing plans, your head will spin with all the options you can choose and upgrade. There are separate categories for bandwidth, storage, servers (at various speeds), CPU, security options, and so on.

Simply put, with DigitalOcean there are lots of ways to make your monthly bill very expensive.

Most other providers offer 2 to 5 distinct plans, which give you a good overview of what you get. With DigitalOcean, you can customize everything yourself.

That can be a good thing, but unless you're an advanced user (as mentioned above), it's rather complicated and time-consuming.

DigitalOcean Pricing, Hosting Plans, and Quick Facts

DigitalOcean's Standard Droplets plan starts at $5 per month. Prices rise from there until you're paying $80 per month for higher-end services:

[Figure: DigitalOcean basic Droplet prices]

When you look at the CPU-Optimized Droplets, those start at $40 and go all the way up to a whopping $720 per month:

[Figure: DigitalOcean CPU-Optimized Droplet pricing]

Quick Facts

Ease of Signup: Quite easy (you can sign up with email, a Google Account, or GitHub)

Free Domain: No.

Money Back: No. Pricing is based on a pay-as-you-go model.

Payment Methods: All major debit and credit cards, PayPal.

Hidden Fees and Clauses: No major ones.

Upsells: A few upsells.

Account Activation: Account activation is quick.

Control Panel and Dashboard: Custom control panel (with a cPanel option)

Installation of Apps and CMSs (WordPress, Joomla, etc.): One-click installer for WordPress and other applications/CMSs.

Do We Recommend DigitalOcean?

Yes…

… as long as you're a developer.

If you're just an average person looking to launch a web presence, there are far more user-friendly products out there that will cost you far less.

For someone who knows their way around the tech world, there seems to be no faster or higher-performing product than DigitalOcean.

There are a few drawbacks, but if uptime and speed are the main factors for you, DigitalOcean is among the best choices available.

Best alternatives for DigitalOcean:

Bluehost: Very Good Uptime | Easy to Use for Beginners | 24/7 Customer Support

DreamHost: Best Month-to-Month Plan | 97-Day Refund Period | Unlimited Bandwidth

Further reading: The 10 Best Web Hosting Services (In 2021)

If you have used DigitalOcean's service, please don't forget to leave a review of your experience for other people who want to use it. See you in another article.

Pluralistic, your daily link-dose: 28 Feb 2020

Today's links

Clearview AI's customer database leaks: Sic semper grifter.

The Internet of Anal Things: Recreating Stelarc's "Amplified Body" with an IoT butt-plug.

Oakland's vintage Space Burger/Giant Burger building needs a home! Adopt a googie today.

Fan-made reproduction of the Tower of Terror: Even has a deepfaked Serling.

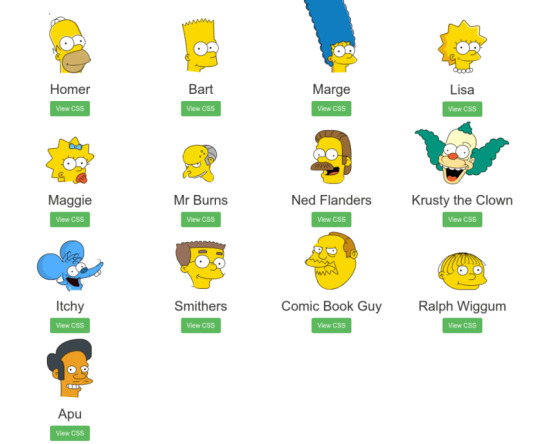

Drawing the Simpsons with pure CSS: Impractical, but so impressive.

Let's Encrypt issues its billionth cert: 81% of pageloads now use HTTPS.

AI Dungeon Master: A work in progress, for sure.

How to lie with (coronavirus) maps: Lies, damned lies, and epidemiological data-visualizations.

This day in history: 2019, 2015

Colophon: Recent publications, current writing projects, upcoming appearances, current reading

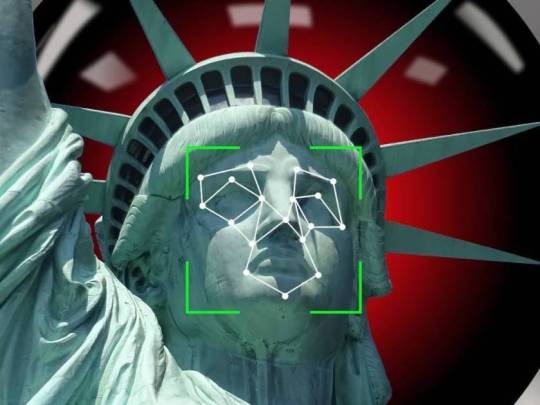

Clearview AI's customer database leaks (permalink)

Clearview is the grifty facial recognition startup that created a database by scraping social media and now offers cops secretive deals on its semi-magic, never-peer-reviewed technology. The company became notorious in January after the NYT did a deep dive into its secretive deals and its weird, Trump-adjacent ex-male-model founder.

(the Times piece was superbly researched but terribly credulous about Clearview's marketing claims)

https://www.nytimes.com/2020/01/18/technology/clearview-privacy-facial-recognition.html

Yesterday, Clearview warned its customers that it had been hacked and lost its customer database. Today, that customer database was published.

https://www.buzzfeednews.com/article/ryanmac/clearview-ai-fbi-ice-global-law-enforcement

It seems that the NYT weren't the only ones to take Clearview's marketing claims at face value. Its client list includes the DoJ, ICE, Macy's, Walmart, and the NBA. All in all, the dump includes more than 2,200 users, including "law enforcement agencies, companies, and individuals around the world."

Included: state AGs, university rent-a-cops, and clients in Saudi Arabia.

"BuzzFeed News authenticated the logs, which list about 2,900 institutions and include details such as the number of log-ins, the number of searches, and the date of the last search."

What does Clearview, a security company, say about this ghastly security breach? "Unfortunately, data breaches are part of life in the 21st century."

Big shrug energy.

"Government agents should not be running our faces against a shadily assembled database of billions of our photos in secret and with no safeguards against abuse," ACLU attorney Nathan Freed Wessler, said to BuzzFeed News.

It is amazing that this needs to be said.

"More than 50 educational institutions across 24 states named in the log. Among them are two high schools."

They are:

Central Montco Technical High School in Pennsylvania

Somerset Berkley Regional High School in Massachusetts

The log also has an entry for Interpol.

The Internet of Anal Things (permalink)

In 1994, the notorious/celebrated electronic artist Stelarc did a performance called "Amplified Body" in which he "controlled robots, cameras and other instruments by tensing and releasing his muscles"

https://web.archive.org/web/20120712181429/https://v2.nl/events/amplified-body

Now, artist/critic Dani Ploeger has revisited Amplified Body with his own performance, which is very similar to Stelarc's, except all the peripherals are controlled by Ploeger tensing and releasing his anal sphincters around a smart butt-plug.

https://www.daniploeger.org/amplified-body

He calls it "B-hind" and it's a ha-ha-only-serious. The buttplug is "an anal electrode with EMG sensor for domestic treatment of faecal incontinence," and the accompanying text is a kind of art-speak parody of IoT biz-speak.

https://we-make-money-not-art.com/b-hind-celebrating-the-internet-of-anal-things

"B-hind offers a unique IoT solution to fully integrate your sphincter muscle in everyday living. The revolutionary anal electrode-powered interface system replaces conventional hand/voice-based interaction, enabling advanced digital control rooted in your body's interior. Celebrating the abject and the grotesque, B‒hind facilitates simple, plug-and-play access to a holistic body experience in the age of networked society."

B-hind was produced in collaboration with V2_, the Lab for the Unstable Media in Rotterdam, and In4Art.

Oakland's vintage Space Burger/Giant Burger building needs a home! (permalink)

Giant Burger was once an East Bay institution, known for its burgers and its gorgeous googie architecture.

https://localwiki.org/oakland/Giant_Burger

One of the very last Giant Burger buildings is now under threat. Though the Telegraph Ave location was rescued in 2015 and converted to a "Space Burger," it's now seeking a new home because it is in the path of the Eastline project.

https://insidescoopsf.sfgate.com/blog/2015/02/24/space-burger-launches-in-uptown-oakland/

The Oakland Heritage Alliance is hoping someone will rescue and move the building: "Do you have an idea for a new location for this mid-century icon? Please contact [email protected] if you know of an appropriate lot, project, or site, preferably downtown."

(Image CC BY-SA, Our Oakland)

Fan-made reproduction of the Tower of Terror (permalink)

Orangele set out to re-create the Walt Disney World Twilight Zone Tower of Terror elevator loading zone in the entry area to his home theater. He's not only done an impressive re-make of the set, but he's also augmented it with FANTASTIC gimmicks.

https://www.hometheaterforum.com/community/threads/the-tower-of-terror-theater.365747/

It's not merely that he's created a rain, thunder and lightning effect outside the patio doors…

https://www.youtube.com/watch?v=4QMzN0v4mJQ

Nor has he merely created props like this gimmicked side table that flips over at the press of a button.

https://www.youtube.com/watch?v=kY7gQLMnbeA

He's also created HIS OWN ROD SERLING DEEPFAKE.

https://www.youtube.com/watch?time_continue=2&v=MIsjYJwOXSU

I kinda seriously love that he left Rod's cigarette in. The Disney version looks…uncanny.

Not shown: "exploding fuse box with simulated smoke and fire, motorized lighted elevator dial, motorized/lighted pressure gauge, video monitor playing Tower of Terror ride sequence seen through the elevator door wrap, motorized 'elevator door'"

He notes, "I was once married, but now as a single person, I can do whatever I want, haha. NEVER getting married again."

Drawing the Simpsons with pure CSS (permalink)

Implementing animated Simpsons illustrations in CSS isn't the most practical web-coding demo I've seen, but it's among the most impressive. Bravo, Chris Pattle!

(not shown: the eyes animate and blink!)

https://pattle.github.io/simpsons-in-css/

```css
#bart .head .hair1 {
  top: 22px;
  left: 0px;
  width: 6px;
  height: 7px;
  -webkit-transform: rotate(-22deg) skew(-7deg, 51deg);
  -ms-transform: rotate(-22deg) skew(-7deg, 51deg);
  transform: rotate(-22deg) skew(-7deg, 51deg);
}
```

I especially love the quick-reference buttons to see the raw CSS. It reminds me of nothing so much as the incredibly complex Logo programs I used to write on my Apple ][+ in the 1980s, drawing very complicated, vector-based sprites and glyphs.

https://github.com/pattle/simpsons-in-css/blob/master/css/bart.css

Most interesting is the way that this modular approach to graphics allows for this kind of simple, in-browser transformation.

Let's Encrypt issues its billionth cert (permalink)

When the AT&T whistleblower Mark Klein walked into EFF's offices in 2005 to reveal that his employers had ordered him to help the NSA spy on the entire internet, it was a bombshell.

https://www.eff.org/tags/mark-klein

The Snowden papers revealed the scope of the surveillance in fine and alarming detail. According to his memoir, Snowden was motivated to blow the whistle when he witnessed then-Director of National Intelligence James Clapper lie to Senator Ron Wyden about the Klein matter.

Since that day in 2005, privacy advocates have been fretting about just how EASY it was to spy on the whole internet. So much of that was down to the fact that the net wasn't encrypted by default.

This was especially keenly felt at @EFF. After all, we made our bones by suing the NSA in the 90s and winning the right for civilians to access working cryptography (we did it by establishing that "Code is speech" for the purposes of the First Amendment).

https://www.eff.org/deeplinks/2015/04/remembering-case-established-code-speech

Crypto had been legal since 1992, but by Klein's 2005 disclosures, it was still a rarity. 8 years later — at the Snowden moment — the web was STILL mostly plaintext. How could we encrypt the web to save it from mass surveillance?

So in 2014, we joined forces with Mozilla, the University of Michigan and Akamai to create Let's Encrypt, a project to give anyone and everyone free TLS certificates, the key component needed to encrypt the requests your web-server exchanges with your readers.

https://en.wikipedia.org/wiki/Let%27s_Encrypt

Encrypting the web was an uphill climb: by 2017, Let's Encrypt had issued 100m certificates, tipping the web over so that the majority of traffic (58%) was encrypted. Today, Let's Encrypt has issued ONE BILLION certs, and 81% of pageloads use HTTPS (in the USA, it's 91%)! This is astonishing, bordering on miraculous. If this had been the situation back in 2005, there would have been no NSA mass surveillance.

Even more astonishing: there are only 11 full-timers on the Let's Encrypt team, plus a few outside contractors and part-timers. A group of people who could fit in a minibus managed to encrypt virtually the entire internet.

https://letsencrypt.org/2020/02/27/one-billion-certs.html

There are lots of reasons to factor technology (and technologists) in any plan for social change, but this illustrates one of the primary tactical considerations. "Architecture is Politics" (as Mitch Kapor said when he co-founded EFF), and the architectural choices that small groups of skilled people make can reach all the way around the world.

This kind of breathtaking power is what inspires so many people to become technologists: the force-multiplier effect of networked code can imbue your work with global salience (for good or ill). It's why we should be so glad of the burgeoning tech and ethics movement, from Tech Won't Build It to the Googler Uprising. And it's especially why we should be excited about the proliferation of open syllabi for teaching tech and ethics.

https://docs.google.com/spreadsheets/d/1jWIrA8jHz5fYAW4h9CkUD8gKS5V98PDJDymRf8d9vKI/edit#gid=0

It's also the reason I'm so humbled and thrilled when I hear from technologists that their path into the field started with my novel Little Brother, whose message isn't "Tech is terrible," but, "This will all be so great, if we don't screw it up."

https://craphound.com/littlebrother

(and I should probably mention here that the third Little Brother book, Attack Surface, comes out in October and explicitly wrestles with the question of ethics, agency, and allyship in tech).

https://us.macmillan.com/books/9781250757531

AI Dungeon Master (permalink)

Since 2018, Lara Martin has been using machine learning to augment the job of the Dungeon Master, with the goal of someday building a fully autonomous, robotic DM.

https://laramartin.net/

AI Dungeon Master is a blend of ML techniques and "old-fashioned rule-based features" to create a centaur DM that augments a human DM's imagination with the power of ML, natural language processing, and related techniques.

She's co-author of a new paper about the effort, "Story Realization: Expanding Plot Events into Sentences," which "describes a way [for] algorithms to use 'events,' consisting of a subject, verb, object, and other elements, to make a coherent narrative."

https://aaai.org/Papers/AAAI/2020GB/AAAI-AmmanabroluP.6647.pdf

The system uses training data (plots from Doctor Who, Futurama, and X-Files) to expand text-snippets into plotlines that continue the action. It's a bit of a dancing bear, though: an impressive achievement that's not quite ready for primetime ("We're nowhere close to this being a reality yet").

https://www.wired.com/story/forget-chess-real-challenge-teaching-ai-play-dandd/

This may bring to mind AI Dungeon, the viral GPT-2-generated dungeon crawler from December.

https://aidungeon.io/

As Will Knight writes, "Playing AI Dungeon often feels more like a maddening improv session than a text adventure."

Knight proposes that "AI DM" might be the next big symbolic challenge for machine learning, the 2020s equivalent to "AI Go player" or "AI chess master."

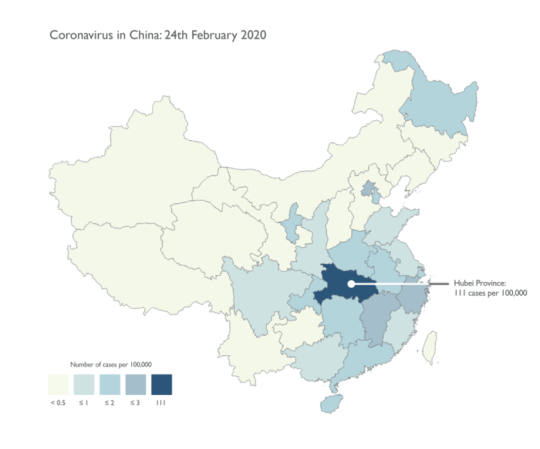

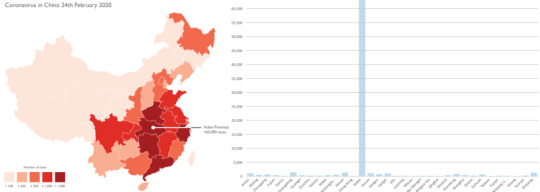

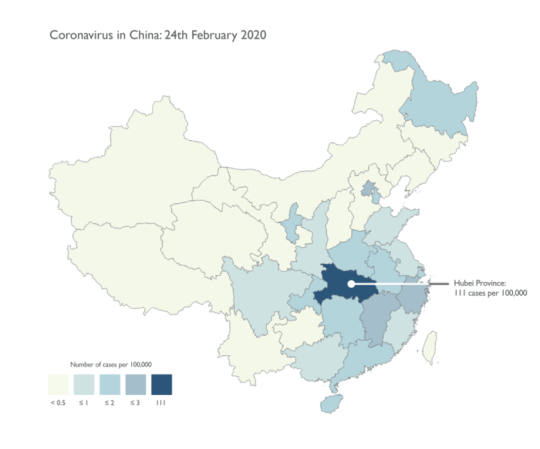

How to lie with (coronavirus) maps

The media around the coronavirus outbreak is like a masterclass in the classic "How to Lie With Maps."

https://www.press.uchicago.edu/ucp/books/book/chicago/H/bo27400568.html

Self-described "cartonerd" Kenneth Field's prescriptions for mapmakers who want to illustrate the spread of coronavirus are a superb read about data visualization, responsibility, and clarity.

https://www.esri.com/arcgis-blog/products/product/mapping/mapping-coronavirus-responsibly/

Both of these images represent the same data. Look at the map and you might get the impression that coronavirus infections are at high levels across all of China's provinces. Look at the bar chart and you'll see that it's almost entirely Hubei.

Here's a proposed way to represent the same data on a map without misleading people.

Another point that jumped out: stop coloring maps in red!

"We're mapping a human health tragedy that may get way worse before it subsides. Do we really want the map to be screaming bright red? Red can connotate death, still statistically extremely rare for coronavirus."

This day in history

#5yrsago Ad-hoc museums of a failing utopia: photos of Soviet shop-windows https://boingboing.net/2015/02/28/ad-hoc-museums-of-a-failing-ut.html

#5yrsago First-hand reports of torture from Homan Square, Chicago PD's "black site" https://www.theguardian.com/us-news/2015/feb/27/chicago-abusive-confinment-homan-square

#1yrago EFF's roadmap for a 21st Century antitrust doctrine https://www.eff.org/deeplinks/2019/02/antitrust-enforcement-needs-evolve-21st-century

#1yrago Yet another study shows that the most effective "anti-piracy" strategy is good products at a fair price https://www.vice.com/en_us/article/3kg7pv/studies-keep-showing-that-the-best-way-to-stop-piracy-is-to-offer-cheaper-better-alternatives

#1yrago London's awful estate agents are cratering, warning of a "prolonged downturn" in the housing market https://www.bbc.com/news/business-47389160

#1yrago Bad security design made it easy to spy on video from Ring doorbells and insert fake video into their feeds https://web.archive.org/web/20190411195308/https://dojo.bullguard.com/dojo-by-bullguard/blog/ring/

#1yrago Amazon killed Seattle's homelessness-relief tax by threatening not to move into a massive new building, then they canceled the move anyway https://www.seattletimes.com/business/amazon/huge-downtown-seattle-office-space-that-amazon-had-leased-is-reportedly-put-on-market/

#1yrago The "Reputation Management" industry continues to depend on forged legal documents https://www.techdirt.com/articles/20190216/15544941616/pissed-consumer-exposes-new-york-luxury-car-dealers-use-bogus-notarized-letters-to-remove-critical-reviews.shtml

Colophon

Today's top sources: Allegra of Oakland Heritage Alliance, Waxy (https://waxy.org/), We Make Money Not Art (https://we-make-money-not-art.com/), Sam Posten (https://twitter.com/Navesink), Slashdot (https://slashdot.org), Kottke (https://kottke.org) and Four Short Links (https://www.oreilly.com/feed/four-short-links).

Hugo nominators! My story "Unauthorized Bread" is eligible in the Novella category and you can read it free on Ars Technica: https://arstechnica.com/gaming/2020/01/unauthorized-bread-a-near-future-tale-of-refugees-and-sinister-iot-appliances/

Upcoming appearances:

Canada Reads Kelowna: March 5, 6PM, Kelowna Library, 1380 Ellis Street, with CBC's Sarah Penton https://www.eventbrite.ca/e/cbc-radio-presents-in-conversation-with-cory-doctorow-tickets-96154415445

Currently writing: I just finished a short story, "The Canadian Miracle," for MIT Tech Review. It's set in the world of my next novel, "The Lost Cause," a post-GND novel about truth and reconciliation. I'm gearing up to start work on the novel now, though the timing will depend on another pending commission: an NGO has asked me to write a short story set in the world's prehistory.

Currently reading: Just started Lauren Beukes's forthcoming Afterland: it's Y the Last Man plus plus, and two chapters in, it's amazeballs. Last week, I finished Andrea Bernstein's "American Oligarchs"; it's a magnificent history of the Kushner and Trump families, showing how they cheated, stole and lied their way into power. I'm getting really into Anna Wiener's memoir about tech, "Uncanny Valley." I just loaded Matt Stoller's "Goliath" onto my underwater MP3 player and I'm listening to it as I swim laps.

Latest podcast: Gopher: When Adversarial Interoperability Burrowed Under the Gatekeepers' Fortresses: https://craphound.com/podcast/2020/02/24/gopher-when-adversarial-interoperability-burrowed-under-the-gatekeepers-fortresses/

Upcoming books: "Poesy the Monster Slayer" (Jul 2020), a picture book about monsters, bedtime, gender, and kicking ass. Pre-order here: https://us.macmillan.com/books/9781626723627?utm_source=socialmedia&utm_medium=socialpost&utm_term=na-poesycorypreorder&utm_content=na-preorder-buynow&utm_campaign=9781626723627

(we're having a launch for it in Burbank on July 11 at Dark Delicacies and you can get me AND Poesy to sign it and Dark Del will ship it to the monster kids in your life in time for the release date).

"Attack Surface": The third Little Brother book, Oct 20, 2020.

"Little Brother/Homeland": A reissue omnibus edition with a very special, s00per s33kr1t intro.


Load Project Data from MSP Database & Reading/Writing Rate Scale Data for MSP using .NET

What’s new in this release?

The Aspose team is pleased to announce the new release of Aspose.Tasks for .NET 17.8.0. This month’s release adds support for working with rate scale information in MPP files from MSP 2013 onward, and fixes several bugs reported in previous versions of the API. Aspose.Tasks for .NET already supported reading and writing rate scale information for resource assignments in MPP 2013 and earlier formats; with this release, the API also supports reading and writing rate scale data in MSP 2013 and later MPP file formats. Loading project data from a Microsoft Project database was supported in an earlier version of the API, but later updates to Microsoft Project Database versions broke that functionality. This issue has now been fixed, so you can once again load project data from a Project database with this version of the API. This release includes the following new features and fixes:

Add support for RateScale reading/writing for MSP 2013+

TasksReadingException while using MspDbSettings

Error on adding a resource with 0 units to parent task

ActualFinish of a zero-day milestone task not set properly

MPP with Subproject File causes exception while loading into project

Wrong Percent complete in MPP as compared to XML output

Fix difference in Task duration in MSP 2010 and 2013

MPP to XLSX: Resultant file doesn't contain any data

ExtendedAttribute Lookup values mixed up for the same task

Lookup extended attribute with CustomFieldType.Duration can't be saved along with other lookup attributes

Custom field with Cost type and lookup can't be saved to MPP

Tsk.ActualDuration and Tsk.PercentComplete are not calculated after setting of Assn.ActualWork property

Unassigned resource assignment work rendered as 0h

Other recent bug fixes are also included in this release

Newly added documentation pages and articles

New tips and articles have been added to the Aspose.Tasks for .NET documentation to briefly guide users through tasks such as the following:

Read Write Rate Scale Information

Importing Project Data From Microsoft Project Database

Overview: Aspose.Tasks for .NET

Aspose.Tasks is a non-graphical .NET Project management component that enables .NET applications to read, write and manage Project documents without utilizing Microsoft Project. With Aspose.Tasks you can read and change tasks, recurring tasks, resources, resource assignments, relations and calendars. Aspose.Tasks is a very mature product that offers stability and flexibility. As with all of the Aspose file management components, Aspose.Tasks works well with both WinForm and WebForm applications.

More about Aspose.Tasks for .NET

Homepage of Aspose.Tasks for .NET

Download Aspose.Tasks for .NET

Online documentation of Aspose.Tasks for .NET



Business Analyst Finance Domain Sample Resume

This is a sample resume for freshers as well as experienced job seekers in the finance domain, for business analyst or systems analyst roles. Use it for reference only: do not copy the client names or job duties for your own purposes. Always build your own resume from genuine experience.

Name: Justin Megha

Ph no: XXXXXXX

your email here.

Business Analyst, Business Systems Analyst

SUMMARY

Accomplished in Business Analysis, Systems Analysis, Quality Analysis and Project Management, with extensive experience in business products, operations and Information Technology in the capital markets space, specializing in finance areas such as Trading, Fixed Income, Equities, Bonds, Derivatives (Swaps, Options, etc.) and Mortgage, with sound knowledge of a broad range of financial instruments. 11+ years of proven track record as a value-adding, delivery-focused, project-hardened professional with hands-on expertise in System Analysis, Architecting Financial Applications, Data Warehousing, Data Migrations, Data Processing, ERP Applications, SOX Implementation and Process Compliance Projects. Experienced in the analysis of large-scale business systems, Project Charters, Business Requirement Documents, Business Overview Documents, Narrative Use Cases, Functional Specifications, Technical Specifications, data warehousing, reporting and test plans. Expertise in creating UML-based modelling views such as Activity, Use Case, Data Flow, Business Flow, Navigational Flow and Wire Frame diagrams using Rational products and MS Visio. Long-time liaison between business and technology, competent across the full Systems Development Life Cycle (SDLC) with Waterfall, Agile and RUP methodologies, IT Auditing and SOX concepts, with broad cross-functional experience leveraging multiple frameworks. Worked extensively with on-site and offshore Quality Assurance groups, assisting the QA team with Black Box, GUI, Functional, Regression, System, Unit, Stress, Performance and User Acceptance Testing (UAT). Facilitated change management across the entire process, from project conceptualization through testing and delivery, as well as Software Development & Implementation Management in diverse business and technical environments, with demonstrated leadership abilities.

EDUCATION

Post Graduate Diploma (in Business Administration), USA

Master's Degree (in Computer Applications)

Bachelor's Degree (in Commerce)

TECHNICAL SKILLS

Documentation Tools: UML, MS Office (Word, Excel, PowerPoint, Project), MS Visio, Erwin

SDLC Methodologies: Waterfall, Iterative, Rational Unified Process (RUP), Spiral, Agile

Modeling Tools: UML, MS Visio, Erwin, Power Designer, Metastorm ProVision

Reporting Tools: Business Objects XI R2, Crystal Reports, MS Office Suite

QA Tools: Quality Center, Test Director, WinRunner, LoadRunner, QTP, Rational RequisitePro, Bugzilla, ClearQuest

Languages: Java, VB, SQL, HTML, XML, UML, ASP, JSP

Databases & OS: MS SQL Server, Oracle 10g, DB2, MS Access on Windows XP / 2000, Unix

Version Control: Rational ClearCase, Visual SourceSafe

PROFESSIONAL EXPERIENCE

SERVICE MASTER, Memphis, TN, June 08 - Present

Senior Business Analyst

Terminix has approximately 800 customer service agents based in its branches, in addition to approximately 150 agents in a centralized call center in Memphis, TN. Terminix customer service agents receive approximately 25 million calls from customers each year. Many customers' questions are not answered, or their problems not resolved, on the first call. Currently these agents use a custom-developed, AS/400-based system called Mission to answer customer inquiries at the branches and the Customer Communication Center. Mission, Terminix's operations system, provides functionality for sales, field service (routing & scheduling, work order management), accounts receivable, and payroll. The system is modular in design and difficult to navigate for customer service agents who need to assist customers quickly and knowledgeably. The effort and time needed to train a customer service representative on Mission is high. This, combined with low agent and customer retention, is costly.

The Customer Service Console (CSC) enables Customer Service Associates to provide a consistent, enhanced service experience and support to customers across the organization. CSC aims to provide easy navigation, an easy learning process, reduced call time and first-call resolution.

Responsibilities

Assisted in creating the Project Plan and Road Map. Designed the Requirements Planning and Management document. Performed Enterprise Analysis and actively participated in purchasing tool licenses. Identified subject-matter experts and drove the requirements-gathering process through approval of the documents that convey their needs to management, developers, and the quality assurance team. Performed technical project consultation, initiation, collection and documentation of client business and functional requirements, solution alternatives, functional design, testing and implementation support. Carried out Requirements Elicitation, Analysis, Communication, and Validation according to Six Sigma standards. Captured business process flows and reengineered processes to maximize output. Captured the As-Is process, designed the To-Be process and performed Gap Analysis. Developed and updated functional use cases and conducted business process modeling (ProVision) to explain business requirements to the development and QA teams. Created Business Requirements Documents and Functional and Software Requirements Specification documents. Performed requirements elicitation through use cases, one-on-one meetings, affinity exercises and SIPOCs. Gathered and documented use cases and business rules; created and maintained Requirements/Test Traceability Matrices.

Client: The Dun & Bradstreet Corporation, Parsippany, NJ, May 2007 - Oct 2007

Profile: Sr. Financial Business Analyst/ Systems Analyst.

Project Profile (1): D&B is the world's leading source of commercial information and insight on businesses. The Point of Arrival Project and the Data Maintenance (DM) Project are the future applications the company planned to transition into, providing an effective and efficient report-generation system that lets D&B's clients purchase reports about companies they are trying to do business with.

Project Profile (2): The overall purpose of this project was to build a Self Awareness System (SAS) for the business community to buy SAS products, along with a payment system for SAS. The system would provide a certain combination of products (reports) for the Self Monitoring report as a foundation for managing a company's credit.

Responsibilities:

Conducted GAP Analysis and documented the current state and future state, after understanding the vision of the Business Group and the Technology Group. Conducted interviews with Process Owners, Administrators and Functional Heads to gather audit-related information and facilitated meetings to explain the impacts and effects of SOX compliance. Played an active, leading role in gathering, analyzing and documenting the Business Requirements, business rules and Technical Requirements from the Business Group and the Technology Group. Co-authored Narrative Use Cases and prepared their graphical depictions, creating UML models such as Use Case Diagrams, Activity Diagrams and Flow Diagrams in MS Visio throughout the Agile methodology. Documented the Business Requirement Document to gain a better understanding of the client's business processes for both projects using the Agile methodology. Facilitated JRP and JAD sessions and brainstorming sessions with the Business Group and the Technology Group. Documented the Requirement Traceability Matrix (RTM) and conducted UML modelling, such as creating Activity Diagrams and Flow Diagrams in MS Visio. Analysed test data to detect significant findings and recommended corrective measures. Co-managed the Change Control process for the entire project by facilitating group meetings, one-on-one interview sessions and email correspondence with work stream owners to discuss the impact of Change Requests on the project. Worked with the Project Lead to set realistic project expectations, evaluated the impact of changes on the organization and its plans, and conducted project-related presentations. Coordinated with the offshore QA team members to explain and develop the Test Plans, Test Cases, Test and Evaluation strategy and methods for unit testing, functional testing and usability testing.

Environment: Windows XP/2000, SOX, SharePoint, SQL, MS Visio, Oracle, MS Office Suite, Mercury ITG, Mercury Quality Center, XML, XHTML, Java, J2EE.

GATEWAY COMPUTERS, Irvine, CA, Jan 06 - Mar 07

Business Analyst

Involved in two projects at Gateway, a leading computer, laptop and accessory manufacturer:

Order Capture Application: The objective of this project was to develop various sales channels with a centralized catalog. The project involved wide exposure to requirement analysis and to creating, executing and maintaining test plans and test cases. Mentored and trained staff on the Tech Guide and company standards.

Gateway Reporting System: Developed with Business Objects running against an Oracle data warehouse with Sales, Inventory, and HR data marts. This DW serves the different needs of sales personnel and management. Involved in its development, which used Full Client reports and Web Intelligence to deliver analytics to the Contract Administration and Pricing groups. The reporting data mart included Wholesaler Sales, Contract Sales and Rebates data.

Responsibilities:

Product Manager for Enterprise Level Order Entry Systems: Phone, B2B, Gateway.com and the Cataloging System. Modeled the Sales Order Entry process to eliminate bottleneck process steps using ERwin. Adhered to and practiced RUP for implementing the software development life cycle. Gathered requirements from sources such as stakeholders, documentation, corporate goals, existing systems, and subject matter experts by conducting workshops, interviews, use cases, prototypes, document reviews, market analysis and observation. Created Functional Requirement Specification documents, including UML use case diagrams, scenarios, activity and workflow diagrams, and data mapping. Performed process and data modeling with MS Visio. Worked with the technical team to create business services (web services) that the application could leverage using SOA, and to create the system architecture and CDM for a common order platform. Designed Payment Authorization (Credit Card, Net Terms, and PayPal) for the transaction/order entry systems. Implemented A/B testing and customer feedback functionality on Gateway.com. Worked with the DW and ETL teams to create Business Objects reports (Full Client, Web I) for the order entry systems. Worked in a cross-functional team of business staff, architects and developers to implement new features. Program-managed the Enterprise Order Entry Systems development and deployment schedule. Developed and maintained user manuals and application documentation on SharePoint. Created test plans and test strategies to define the objective and approach of testing. Used Quality Center to track and report system defects and bug fixes. Wrote modification requests for bugs in the application and helped developers track and resolve the problems. Developed and executed manual and automated functional, GUI, regression and UAT test cases using QTP. Gathered, documented and executed requirements-based, business process (workflow/user scenario) and data-driven test cases for User Acceptance Testing. Created the test matrix and used Quality Center for test management and for tracking and reporting system defects and bug fixes. Performed load and stress testing and analyzed performance and response times. Designed the approach and developed visual scripts to test client- and server-side performance under various conditions to identify bottlenecks. Created SQL queries (TOAD) with several parameters for backend/DB testing. Conducted meetings for project status, issue identification, parent task review and progress reporting.

AMC MORTGAGE SERVICES, CA, USA, Oct 04 - Dec 05

Business Analyst

The primary objective of this project was to replace the existing internal-facing client/server applications with a web-enabled application system that could be used across all business channels. The project involved wide exposure to requirement analysis and to creating, executing and maintaining test plans and test cases. It demanded understanding and testing of the data warehouse and data marts and thorough knowledge of ETL and reporting. The enhancement of the legacy system covered all business requirements related to valuations, from maintaining the panel of appraisers to ordering, receiving, and reviewing valuations.

Responsibilities:

Gathered, analyzed, validated, managed and documented the stated requirements. Interacted with users to verify requirements, manage the change control process and update existing documentation. Created Functional Requirement Specification documents, including UML use case diagrams, scenarios, activity diagrams and data mapping. Provided end-user consulting on functionality and business processes. Acted as a client liaison to review priorities and manage the overall client queue. Provided consultation services to clients, technicians and internal departments on basic to intricate functions of the applications. Identified business directions and objectives that may influence the required data and application architectures. Defined and prioritized business requirements, determined which business subject areas provide the most needed information, and prioritized and sequenced implementation projects accordingly. Provided relevant test scenarios for the testing team. Worked with the test team to develop system integration test scripts and ensured the testing results corresponded to business expectations. Used Test Director, QTP and LoadRunner for test management and for functional, GUI, performance and stress testing. Performed data validation, data integration and backend/DB testing using manual SQL queries. Created test input requirements and prepared test data for data-driven testing. Mentored and trained staff on the Tech Guide and company standards. Set up and coordinated onsite and offshore teams, and conducted knowledge transfer sessions for the offshore team.

LLOYDS BANK, UK, Aug 03 - Sept 04

Business Analyst

Lloyds TSB is a leader in business, personal and corporate banking, and a noted financial provider offering millions of customers the financial resources to meet and manage their credit needs and to achieve their financial goals. The project involved the Applicant Information System, Loan Appraisal and Loan Sanction, Legal, Disbursements, Accounts, MIS and Report modules of a Housing Finance System, as well as enhancements to their Internet banking.

Responsibilities:

Translated stakeholder requirements into documentation deliverables such as functional specifications, use cases, workflow/process diagrams and data flow/data model diagrams. Produced functional specifications and led weekly meetings with developers and business units to discuss outstanding technical issues and deadlines. Coordinated project activities between clients, internal groups and information technology, including project portfolio management and project pipeline planning. Provided functional expertise to developers during the technical design and construction phases of the project. Documented and analyzed business workflows and processes, and presented the studies to the client for approval. Participated in Universe development: planning, designing, building, distribution and maintenance phases. Designed and developed Universes by defining joins and cardinalities between the tables. Created UML use case and activity diagrams for the interaction between the report analyst and the reporting systems. Successfully implemented BPR, improving performance and reducing time and cost. Developed test plans and scripts; performed client testing for routine to complex processes to ensure proper system functioning. Worked closely with UAT testers and end users during system validation and User Acceptance Testing to expose functionality/business logic problems that unit and system testing had missed. Participated in integration, system, regression, performance and UAT testing using TD, WR and LoadRunner. Participated in defect review meetings with team members. Worked closely with the project manager to record, track, prioritize and close bugs. Used CVS to maintain versions between various stages of the SDLC.

Client: A.G. Edwards, St. Louis, MO, May 2005 - Feb 2006

Profile: Sr. Business Analyst/System Analyst

Project Profile: A.G. Edwards is a full-service brokerage firm offering Internet-based futures, options and forex trading. This site allows users (Financial Representatives) to trade online. The main features of the site were: users can open a new account online to trade equities, bonds, derivatives and forex with the trading system, using DTCC's applications as the clearing house agent. Users get real-time streaming quotes for the currency pairs they select, their current position in the forex market, a summary of work orders, payments and current money balances, P&L accounts and available trading power, all continuously updated in real time via live quotes. The site also lets users place, change and cancel an entry order, place a market order, and place/modify/delete/close a stop-loss limit on an open position.

Responsibilities:

Gathered business requirements pertaining to trading, equities and fixed income instruments such as bonds, converted them into functional requirements using the RUP methodology, and authored them in the Business Requirement Document (BRD). Designed and developed all Narrative Use Cases and conducted UML modeling, creating Use Case Diagrams, Process Flow Diagrams and Activity Diagrams in MS Visio. Implemented the entire Rational Unified Process (RUP) methodology of application development with its various workflows, artifacts and activities. Developed business process models in RUP to document existing and future business processes. Established a business analysis methodology around the Rational Unified Process. Analyzed user requirements, attended Change Request meetings to document changes, and implemented procedures to test changes. Assisted in developing project timelines, deliverables and strategies for effective project management. Evaluated existing practices for storing and handling important financial data for compliance. Involved in developing the test strategy and assisted in developing test scenarios, test conditions and test cases. Partnered with the technical areas in the research and resolution of system and User Acceptance Testing (UAT) issues.


SQL Server 2008 R2 64-bit Enterprise Edition download


SQL SERVER 2008 R2 64 BIT ENTERPRISE EDITION DOWNLOAD ISO

I would suggest running a hash on the ISO image to make sure it is not corrupted.
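"Running a hash" means computing a checksum of the downloaded image and comparing it with the value published by whoever distributes the file. The following is a minimal sketch using Python's standard-library `hashlib`; the function names are illustrative, and which algorithm applies (SHA-1, SHA-256, ...) is an assumption that depends on which checksum your download source actually publishes.

```python
import hashlib


def file_digest(path, algorithm="sha256", chunk_size=1 << 20):
    """Return the hex digest of the file at `path`.

    The file is read in fixed-size chunks (1 MiB by default), so a
    multi-gigabyte ISO image never has to fit in memory at once.
    """
    h = hashlib.new(algorithm)  # e.g. "sha256" or "sha1"
    with open(path, "rb") as f:
        # iter() with a sentinel keeps calling f.read() until it returns b"" (EOF).
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def iso_matches(path, expected_hex, algorithm="sha256"):
    """Return True if the file's digest equals the published checksum.

    hexdigest() is lowercase, so the expected value is stripped of
    surrounding whitespace and lowercased before comparing.
    """
    return file_digest(path, algorithm) == expected_hex.strip().lower()
```

For example, `iso_matches("sql_server_2008_r2.iso", "<published hash>", "sha1")`, where the file name is hypothetical. A mismatch means the image is corrupted (or not the file you think it is) and should be downloaded again.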

SQL SERVER 2008 R2 64 BIT ENTERPRISE EDITION DOWNLOAD 64 BIT

SQL Server Enterprise Edition is a comprehensive data management and business intelligence platform that provides enterprise-class scalability, data warehousing, advanced analytics, and security for running business-critical applications. You will have the opportunity to download individual files on the "Thank you for downloading" page after completing your download. I have a customer who had purchased Windows Server 2008 R2 Standard 64-bit. They still have their product key but have misplaced the DVD. The editions seem to get more complicated and confusing as time goes on. In addition, Standard can now be a managed instance: it can be managed by some of the slick multi-server management tools coming down the pike, like the Utility Control Point (read my SQL R2 Utility review). For sales questions, contact a Microsoft representative at in the United States or in Canada.

SQL SERVER 2008 R2 64 BIT ENTERPRISE EDITION DOWNLOAD UPGRADE

If you have a 64-bit version of the client and manageability tools (including SQL Server 2008 R2 RTM Management Studio), or a 64-bit version of any edition of SQL Server 2008 R2, SQL Server 2008 R2 SP1 or SQL Server 2008 R2 SP2, upgrade all products to the 64-bit version of SQL Server 2008 R2 SP3. Many web browsers, such as Internet Explorer 9, include a download manager. I have SQL Server Standard Edition (64-bit). Do I need to upgrade from SQL Server 2005 Standard to 2005 Enterprise first and then to 2008, or can I upgrade SQL Server 2005 Standard directly to SQL Server 2008 Enterprise Edition? Somehow you have missed the most popular edition, Express. You may still be able to get it from resellers, though.

SQL SERVER 2008 R2 64 BIT ENTERPRISE EDITION DOWNLOAD HOW TO

Why should I install the Microsoft Download Manager? Details of how to set up the VHD are included in the documentation that accompanies the product. Failover servers for disaster recovery (new): allows customers to install and run passive SQL Server instances in a separate OSE or server for disaster recovery in anticipation of a failover event. I am planning to put up an all-in-one, load-all-network loading business that requires a system for enterprise loading requests and solutions. The maximum RAM on a 64-bit server is 32 GB. Note: the recommended amount of RAM is 4 GB or more. Processor: minimum 1.4 GHz AMD Opteron, AMD Athlon 64, Intel Xeon with Intel EM64T support, or Intel Pentium IV with EM64T support; recommended 2 GHz or higher. The Developer Edition is not free and costs around 50 to download, but that was back in 2008. Second, you cannot use it in a production environment; it can only be used on a development server. Third, SQL Server 2008 Developer Edition is based on SQL Server 2008 Enterprise Edition and has the same features.

SQL SERVER 2008 R2 64 BIT ENTERPRISE EDITION DOWNLOAD LICENSE

December 2015 Preview (equivalent to 2016 CTP 3.…). Microsoft SQL Server 2008 R2 Enterprise with 2 Core License, 64-bit.

You'll have the opportunity to try new and improved features and functionality of Windows Server 2008 R2 free for 180 days. This download helps you evaluate the new features of Windows Server 2008 R2. You can download the three latest releases.

SQL Server 2008 R2 Management Studio free download: Microsoft SQL Server 2008 Management Studio Express (64-bit), Microsoft SQL Server 2008 Express (64-bit), Microsoft SQL Server 2008 Express (32-bit).

Description: Microsoft SQL Server 2008 R2 for database management.

Method 1: Download SQL Server 2008 as 2 files: (1) installs SQL Server and (2) installs the visual management tool.

Method 2: Download SQL Server 2008 as 1 file (3), which combines (1) and (2) in a single file.

Really, you should be using the most recent version of Management Studio. You can manage downlevel versions (I currently use the 2016 version to manage 2005, 2008, and 2008 R2 instances) except in rare compatibility scenarios (e.g., if you need BIDS 2008 or older SSIS packages). I haven't tried to manage 2000 from 2016, but the 2012 SP2 release was able to do so. 2012 SP2 was the first version that allows you to freely use the fully functional version of Management Studio (rather than the stripped-down Express version, which is missing all kinds of things, including the entire SQL Server Agent node) without any licensing requirements whatsoever.


Final Project Documentation


This is a write-up for my final project, a multiplayer shooting game called 'Space'. It is a reworked and polished version of my previous project. I added better graphics and a settings menu where players can change their name and color. The most significant change is the server-client relationship. Before, my system was a hybrid: the server handled synchronization, while the clients handled collision and movement. Now it is primarily server-side: the server handles collision and synchronization, while each player only controls their own movement.

Server Code

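```javascript
const express = require('express');
const app = express();

// Game server settings
const port = 8080;
const canvas_size = 1500;

// HTTP server (also used to serve the client files) with socket.io attached
const server = app.listen(port);
const io = require('socket.io')(server);

// Global game state, keyed by player id, bullet id, and random shadow id
var players = {};
var bullets = {};
var shadows = {};
```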

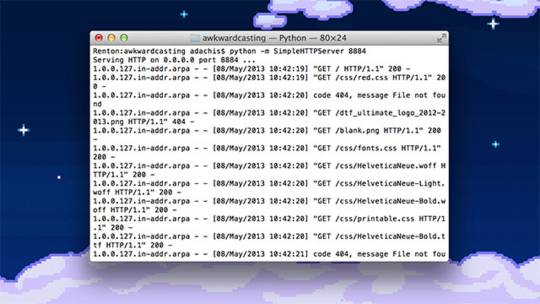

I first imported libraries that are necessary to work with websockets and servers. I also included global variables that will contain player information and basic server/game information. The server will listen on port 8080 for any websocket packets.

```javascript
io.on('connection', function(socket) {
  // A new player joined: store the state their client sent
  socket.on('player_new', function(data) {
    players[data['id']] = data;
  });

  // A client asked for the current game state
  socket.on('sync', function() {
    socket.emit('sync', {'players': players, 'bullets': bullets, 'shadows': shadows});
  });

  // A player moved: replace their stored state
  socket.on('player_move', function(data) {
    players[data['id']] = data;
  });

  // A player fired: spawn a bullet just outside the player's circle
  socket.on('player_shoot', function(data) {
    let player = players[data['id']];
    if (!player) return; // dead or unknown players cannot shoot
    let direction = data['direction'];
    let offset = 35;
    let x_offset = 0;
    let y_offset = 0;
    if (direction === 'up') {
      y_offset = -offset; // canvas y grows downward
    } else if (direction === 'down') {
      y_offset = offset;
    } else if (direction === 'left') {
      x_offset = -offset;
    } else {
      x_offset = offset;  // 'right'
    }
    bullets[data['bid']] = {
      'x': player['x'] + x_offset,
      'y': player['y'] + y_offset,
      'direction': direction,
      'bid': data['bid'],
      'color': data['color'],
      'id': data['id']
    };
  });

  // A player picked a new color in the settings menu
  socket.on('player_color_change', function(data) {
    let player = players[data['id']];
    if (!player) return;
    player['color'] = data['color'];
    console.log('changing colors');
  });

  // Intentionally only logs: disconnected players stay in the game
  socket.on('disconnect', function() {
    console.log('player has disconnected');
  });
});
```

Next, I created handlers that react to websocket events. When somebody loads the webpage, their client connects and the server runs the 'connection' callback, which registers one handler per event name. Based on the event name, the server then creates a new player, syncs the data, moves a player, and so on.
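The dispatch-by-name idea can be illustrated without a browser or a running socket.io server. The sketch below is a minimal, hypothetical stand-in: it uses Node's built-in EventEmitter (whose on/emit pattern socket.io follows) and a made-up fakeSocket object. Only the 'player_new' and 'player_move' event names and the players object come from the server code above.

```javascript
const { EventEmitter } = require('events');

// Shared game state, keyed by player id (mirrors the server's `players` object)
const players = {};

// Hypothetical stand-in for one client's socket connection (not socket.io itself)
const fakeSocket = new EventEmitter();

// Register one handler per event name, as the 'connection' callback does
fakeSocket.on('player_new', (data) => {
  players[data.id] = data;
});
fakeSocket.on('player_move', (data) => {
  players[data.id] = data;
});

// Emitting an event runs only the handler registered under that name
fakeSocket.emit('player_new', { id: 7, x: 100, y: 200 });
fakeSocket.emit('player_move', { id: 7, x: 105, y: 200 });

console.log(players[7].x); // 105
```

EventEmitter calls listeners synchronously, so the state is updated by the time emit returns; over a real network connection the handlers would instead run whenever each packet arrives.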

The first handler creates a player by adding their information to the players data structure. The sync handler packages the server's global variables and sends them to the client. The next handler updates a player's information whenever they move. The shooting handler is more complicated because it creates bullets. A bullet travels in the direction the player is facing, which is what the four conditional statements handle: the bullet's starting x and y coordinates depend on that direction. For example, if the player is facing up, the bullet's x coordinate stays the same, but its y coordinate is decreased by the offset (the canvas y axis points down) so that the bullet appears above the player. The bullet is then packaged into a dictionary and added to the bullets data structure. The second-to-last handler updates the player's color in the players data structure. The disconnect handler only logs a message, which is intended: disconnected players should still exist in the game, so if somebody kills them, the player still dies and the scores are updated.
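The four direction branches in the shooting handler amount to a small mapping from direction to offset. This is a sketch that assumes the same 35-pixel offset and the same "anything else means right" fallback as the server code; spawnOffset and spawnBullet are hypothetical helper names, not part of the original project, and the bullet record omits the color field for brevity.

```javascript
// Offset (in pixels) from the player's center to where a new bullet spawns.
// Canvas coordinates: x grows to the right, y grows downward.
function spawnOffset(direction, offset = 35) {
  switch (direction) {
    case 'up':
      return { dx: 0, dy: -offset };
    case 'down':
      return { dx: 0, dy: offset };
    case 'left':
      return { dx: -offset, dy: 0 };
    default:
      // Like the original else-branch, anything else is treated as 'right'
      return { dx: offset, dy: 0 };
  }
}

// Build a bullet record for a player at (x, y) facing `direction`
function spawnBullet(player, direction, bid) {
  const { dx, dy } = spawnOffset(direction);
  return { x: player.x + dx, y: player.y + dy, direction, bid, id: player.id };
}

const b = spawnBullet({ id: 1, x: 300, y: 400 }, 'up', 42);
console.log(b.x, b.y); // 300 365
```

A player at y = 400 facing up gets a bullet at y = 365, which is above them on screen because smaller y values are higher on the canvas.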

```javascript
// True when two circles overlap: the distance between their centers
// is less than the sum of their radii
function collideCircleCircle(p1x, p1y, r1, p2x, p2y, r2) {
  let a = r1 + r2;
  let x = p1x - p2x;
  let y = p1y - p2y;
  return a > Math.sqrt((x * x) + (y * y));
}
```

This function returns whether two circles overlap: that is the case when the distance between their centers is less than the sum of their radii.
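The same test can be written without a square root by comparing squared values, which gives the same answer for non-negative radii. circlesOverlap is a hypothetical name for this variant; the radius of 15 used in the examples comes from the tick function.

```javascript
// Two circles overlap when the distance between their centers is less than
// the sum of their radii. Comparing squared values avoids Math.sqrt.
function circlesOverlap(x1, y1, r1, x2, y2, r2) {
  const dx = x1 - x2;
  const dy = y1 - y2;
  const rSum = r1 + r2;
  return dx * dx + dy * dy < rSum * rSum;
}

// Bullets and players both use radius 15 in tick(), so they collide
// when their centers are less than 30 px apart.
console.log(circlesOverlap(0, 0, 15, 20, 20, 15)); // true  (distance is about 28.3)
console.log(circlesOverlap(0, 0, 15, 30, 0, 15));  // false (distance is exactly 30)
```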

```javascript
function tick() {
  // 1. Move every bullet and check it against every player
  for (let key in bullets) {
    let offset = 5;
    let bullet = bullets[key];
    let direction = bullet['direction'];
    if (direction === 'up') {
      bullet['y'] -= offset;
    } else if (direction === 'down') {
      bullet['y'] += offset;
    } else if (direction === 'left') {
      bullet['x'] -= offset;
    } else {
      bullet['x'] += offset;
    }
    // Bullets that leave the canvas are discarded
    if (bullet['x'] > canvas_size || bullet['x'] < 0 ||
        bullet['y'] > canvas_size || bullet['y'] < 0) {
      delete bullets[key];
      continue;
    }
    for (let p_key in players) {
      let player = players[p_key];
      let hit = collideCircleCircle(bullet['x'], bullet['y'], 15, player['x'], player['y'], 15);
      if (hit && bullet['id'] != p_key) {
        player['health'] -= 20;
        delete bullets[key];
        if (player['health'] <= 0) {
          player['alive'] = false;
          let killer = players[bullet['id']]; // may be gone if the shooter already died
          let killer_score = killer ? killer['score'] : 0;
          if (killer) {
            killer['score'] += 10;
          }
          // Leave a star whose size grows with the killer's score
          shadows[Math.round(Math.random() * 10000)] = {
            'x': player['x'], 'y': player['y'],
            'r1': 15 + (killer_score / 10) * 2,
            'r2': 35 + (killer_score / 10) * 2,
            'rgb': player['rgb'],
            'duration': 2000
          };
          io.sockets.emit('player_death', player);
          delete players[p_key];
        }
        break; // a bullet can only hit one player
      }
    }
  }
  // 2. Fade every star toward white and remove it when it expires
  for (let key in shadows) {
    let shadow = shadows[key];
    shadow['duration'] -= 1;
    shadow['rgb'] = shadow['rgb'].map(c => c + 0.2);
    if (shadow['duration'] <= 0) {
      delete shadows[key];
    }
  }
  // 3. Broadcast the new state to every client
  io.sockets.emit('sync', {'players': players, 'bullets': bullets, 'shadows': shadows});
}
```

The tick function is the most complicated one because it decides what happens to the game state on every tick. First, it moves every bullet: depending on the direction it was shot in, its x or y coordinate changes by 5 pixels so that it keeps traveling that way. It also deletes any bullet that leaves the canvas, because those bullets would otherwise be 'lost' and keep using memory. After that, it handles collisions between players and bullets. Using the collision function from earlier, it checks whether any player has been hit by a bullet fired by someone else. If so, that player's health drops by 20, and once their health reaches 0 or below, the player is killed and the shooter gains 10 points. Dead players leave behind a star (stored in shadows) whose size grows with the killer's score. Stars are also updated every tick: each color channel increases by 0.2, so the star slowly fades to white, and it disappears once its duration reaches 0.
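Here is the star-fading step in isolation, as a minimal sketch: updateShadows is a hypothetical helper that applies one tick of the decay described above to a shadows object. It assumes each star stores rgb as a three-element array, as the server does, and it caps channels at 255, which the original code does not. As a rough check, a channel starting at 0 needs 255 / 0.2 = 1275 ticks to reach 255, about 12.75 seconds at 10 ms per tick, well within the 2000-tick lifetime.

```javascript
// One tick of star (shadow) decay, following the loop at the end of tick():
// the remaining duration drops by 1 and each RGB channel brightens by 0.2.
// Unlike the original, channels are capped at 255 here (an assumption).
function updateShadows(shadows) {
  for (const key of Object.keys(shadows)) {
    const s = shadows[key];
    s.duration -= 1;
    s.rgb = s.rgb.map((c) => Math.min(255, c + 0.2));
    if (s.duration <= 0) {
      delete shadows[key];
    }
  }
}

// A red star with 3 ticks left
const stars = { 1: { rgb: [255, 0, 0], duration: 3 } };
updateShadows(stars);
console.log(stars[1].duration); // 2
updateShadows(stars);
updateShadows(stars);
console.log(1 in stars); // false
```

Iterating over Object.keys() takes a snapshot of the keys first, so deleting an expired star inside the loop does not disturb the iteration.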

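```javascript
// Advance the game every 10 ms; log (rather than crash on) any error in a tick
setInterval(function() {
  try {
    tick();
  } catch (err) {
    console.log(err);
  }
}, 10);

// Serve the client-side files in ./maze at /maze/
app.use('/maze/', express.static('maze'));
```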

The last part of this code runs the tick function every 10 ms, roughly 100 times per second, and logs any error thrown inside a tick instead of letting it crash the server. Updating the data this often makes the game feel smooth for players. The last line serves the client-side code from the maze folder, which I will explain now.
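The timing constants imply a few useful numbers. The sketch below just does that arithmetic, assuming setInterval fires exactly on schedule, which Node does not guarantee when the process is busy; the constant names are hypothetical copies of values from the server code.

```javascript
// Rough numbers implied by the server constants, assuming ticks fire on schedule
const TICK_MS = 10;        // setInterval period for tick()
const BULLET_STEP = 5;     // pixels a bullet moves per tick
const CANVAS_SIZE = 1500;  // canvas_size on the server

const ticksPerSecond = 1000 / TICK_MS;              // 100
const bulletSpeed = BULLET_STEP * ticksPerSecond;    // 500 px per second
const crossTimeSeconds = CANVAS_SIZE / bulletSpeed;  // 3 seconds to cross the canvas

console.log(ticksPerSecond, bulletSpeed, crossTimeSeconds); // 100 500 3
```

Because tick() also ends with io.sockets.emit('sync', ...), the same rate means roughly 100 full-state broadcasts per second to every connected client.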

Client Code

```javascript
const speed = 5;
const player_size = 50;
const canvas_w = 1200;
const canvas_h = 800;
const naturalKeyNames = ['A', 'B', 'C', 'D', 'E', 'F', 'G']; // notes used in sound file names
const ip = 'localhost';
var socket = io.connect(ip + ':8080');
var words;
var bg, start, button, red, green, blue, menu, canvas;

// Player info
var player_name, player_id, player_rgb, player_x, player_y, player_info;
var player_score = 0;
var player_health = 100;
var player_direction = 'up';
var player_alive = true;
var in_game = false;

// Game state received from the server, plus loaded sounds
var players = {};
var bullets = {};
var shadows = [];
var sounds = [];
```

I first created global variables to store player information and basic game information. The constants hold the canvas dimensions and the note names used to build the sound file names. The last block of variables will hold the game state sent from the server.

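```javascript
function preload() {
  bg = loadImage('assets/bg3.jpg');       // background
  start = loadImage('assets/start.png');  // center lobby image
  words = loadJSON('assets/words.json');  // nouns for random player names
  // One piano note per key name: assets/reg-A.mp3 ... assets/reg-G.mp3
  for (let i = 0; i < naturalKeyNames.length; i++) {
    sounds.push(loadSound('assets/reg-' + naturalKeyNames[i] + '.mp3'));
  }
}
```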

I first preloaded the game assets, which include the background image, the center lobby image, a list of random nouns, and piano sounds. The words form random names for players, and the sounds play when the player dies.

```javascript
function setup() {
  canvas = createCanvas(canvas_w, canvas_h);

  // Settings menu elements (defined in the HTML page)
  let menu = select('.drop');
  let dropdown = select('.dropdown');
  let input_name = select('#name_i');
  let input_red = select('#red_i');
  let input_green = select('#green_i');
  let input_blue = select('#blue_i');
  let sub_name = select('#name_s');
  let sub_color = select('#color_s');

  // Show the dropdown while the mouse is over the menu
  menu.mouseOver(function() { dropdown.show(300); });
  menu.mouseOut(function() { dropdown.hide(300); });

  sub_name.mousePressed(function() {
    player_name = input_name.value();
  });
  sub_color.mousePressed(function() {
    player_rgb = [
      clean_color_input(input_red.value()),
      clean_color_input(input_green.value()),
      clean_color_input(input_blue.value())
    ];
    changeColor(); // tell the server about the new color
  });

  lobby();
  socket.on('sync', sync);
  socket.on('player_score', increment_score);
  socket.on('player_death', death);
}

// Place the player at a random spot in the upper part of the canvas
// (away from the center lobby image) with a random color and name
function lobby() {
  player_id = Math.round(random(100000));
  player_x = 50 + random(canvas_w - 50);
  player_y = 35 + random(canvas_h / 2 - 175);
  player_rgb = [random(255), random(255), random(255)];
  let w = words.words;
  player_name = w[Math.floor(Math.random() * w.length)] + ' ' +
                w[Math.floor(Math.random() * w.length)];
  draw_player(player_name, player_x, player_y, player_rgb, player_health);
  console.log(player_name);
}

// Draw a player's circle with their name above it and health inside it
function draw_player(name, x, y, rgb, health) {
  fill('white');
  text(name, x - (25 + name.length), y - 30);
  fill(rgb);
  ellipse(x, y, player_size, player_size);
  fill('white');
  text(String(health), x - 9, y + 4);
}

// Turn a typed color channel into a number: invalid input gets a random value,
// out-of-range input is clamped to 0-255
function clean_color_input(color) {
  color = parseInt(color);
  if (isNaN(color)) {
    return random(255);
  }
  return constrain(color, 0, 255);
}

// Send the current color to the server (only while alive)
function changeColor() {
  if (player_alive) {
    socket.emit('player_color_change', {'color': player_rgb, 'id': player_id});
  }
}
```

The setup function creates the canvas with the global dimensions defined earlier. It also wires up the settings menu, which lets players change their name or color. Helper functions clean the color input when the player enters invalid values and send the new color to the server. As an important note, there should be a similar check for the name too, but I forgot to include it. The lobby function places the player at a random location away from the middle of the canvas and gives them a random two-word name. The draw_player function draws the player on the canvas with their color, health, and name.
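The missing name check could sit alongside the color cleanup. This is a hypothetical sketch in plain JavaScript (no p5.js), so it runs in Node: cleanColorChannel, cleanName, and randomName are made-up names, and the 20-character name limit and the 0-255 clamping are assumptions rather than rules from the original game. Unlike clean_color_input, the random fallback here is a whole number.

```javascript
// Parse one color channel typed by the player. Non-numeric input falls back to a
// random value (as clean_color_input does); out-of-range numbers are clamped to 0-255.
function cleanColorChannel(input, rand = Math.random) {
  const n = parseInt(input, 10);
  if (Number.isNaN(n)) {
    return Math.floor(rand() * 256); // whole number from 0 to 255
  }
  return Math.min(255, Math.max(0, n));
}

// Trim and length-limit a player name, falling back to a default when empty
function cleanName(input, fallback, maxLength = 20) {
  const name = String(input).trim().slice(0, maxLength);
  return name.length > 0 ? name : fallback;
}

// Pick a two-word name from a word list, like lobby() does with words.json
function randomName(words, rand = Math.random) {
  const pick = () => words[Math.floor(rand() * words.length)];
  return pick() + ' ' + pick();
}

console.log(cleanColorChannel('300'));          // 255
console.log(cleanName('   ', 'Anon'));          // Anon
console.log(randomName(['red', 'fox'], () => 0)); // red red
```

Passing the random source as a parameter keeps the helpers deterministic under test; in the game, the defaults use Math.random.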